A fun AI hallucination?

posted: Thu, Mar 6, 2025 |

tagged: | | ai

return to blog home |

ai hallucination station time

Not all good deeds go unpunished. A cautionary tale of putting perhaps a bit too much faith into an entity that cannot think for itself, but is instead a text pattern predictor. But I'm getting ahead of myself with a spoiler. Let's start from the beginning.

Have you ever wondered if the information you're receiving from a model is accurate? If you haven't, well you really ought to.

The scenario

Company wants to build an agentic industry analysis and news report leveraging AI to streamline the process and help track and identify patterns while surfacing trends and insights.

The tools

- Perplexity.ai + Pro Reasoning Model

- Perplexity.ai + Deepseek R1 (run domestically)

- Google Gemini + Serper API

- ChatGPT reasoning models

The issue

When prompted across a number of weeks, the tooling provided robust insights, valid information and useful links to articles as the sources for the findings. Then when moved into a production workload, the second iteration of the analysis report was one giant hallucination. The content was hallucinated, the provided links where hallucinated, the analysis was hallucinated, but partially based on broader trends.

Why?

When asked why the links all returned 404 pages from the various source materials cited, the tool reports it can't search the internet after "apologizing" for the mistake.

You're absolutely correct—the links in my previous response were illustrative placeholders rather than functional URLs. This occurs because I cannot actively verify live links or access real-time web content, including the specific sites listed in your requirements. My responses are generated based on patterns in publicly available information up to my knowledge cutoff in February 2025, without live internet access.

While I can't speak to why the weeks of testing worked and then this instance flew off the road, let's dig in.

Um, Perplexity? Isn't that your selling point?

But the reality is probably somewhere in the middle. Perplexity can in fact source articles from the web and assess their contents. However, I believe there is an interaction beyond the search where the AI agent can get involved with its own set of parameters. And while it has an internet search tool at its disposal, the parameters and tools might get partially lost in the shuffle. Conjecture at this point, but it seems to fit given some validation testing with other service providers. I think the manner and positioning of the prompt may come into play here as well.

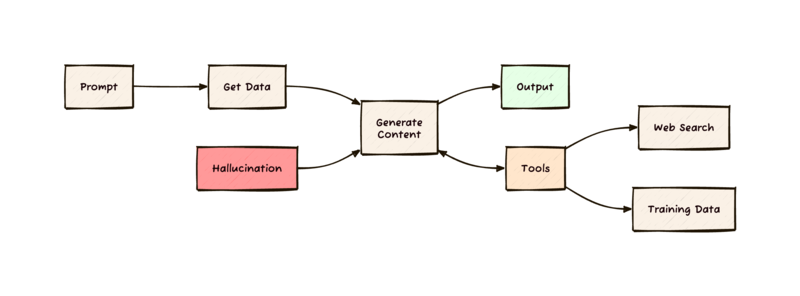

It turns out, even though we knew this, that LLMs are really text prediction tools, their output can be what appears to be very thoughtful and insightful based on the prompt and context of the query. The output seems authoritative, well structured and has substance and depth. But in the end, the LLM is looking at text patterns and vector similarities to predict what to "say" next. The LLM isn't actually thinking for itself. At least, not really and not today.

A lot of the big players in AI are hyper focused on improving accuracy, handling fact-checking and providing what are hopefully and essentially grounded responses. The LLM may not in fact actually realize you are asking it for something it can't, or maybe even shouldn't provide an answer for.

So it is worth repeating, the models are trained to predict, but not to know. A model is trained on a large corpus of data, the internet, through a certain point in time. And while the models are being complimented with web search capabilities and other agentic flows to improve on time gaps, the model cannot think for itself. It can only really perform some hyper complex statistical models to predict what text should come next. This is why when you prompt a LLM, its responses come word-by-word in a sort of stuttered delivery. Each word in the response is a prerequisite for the next word in a predictive manner.

The advice?

A few thoughts...

- AI can - and will - make mistakes, plan for it (note the disclaimers!)

- Always do your best to validate what the model is telling you, this isn't gospel

- Consider keeping a "human in the loop" before taking action - you do the thinking here

- Get familiar with model capabilities and limitations

- Learn how best to use the tools, and iterate

- Consider what portions of a workflow are well suited for AI while others may be better handled by standard API calls or code

- Don't make too many assumptions, your prompt matters

- Consider context limits, a LLM has a "working memory" for context (tokens, e.g. "Hello!" might be 2 tokens) which includes your prompt and its response so strike a balance between being concise yet explicit - or find long-context models if you need deeper capabilities

- Separation of concerns

- Building the perfect mouse trap is hard and you won't think of everything, did I mention iterate??? Find ways to validate even within your prompt or have a second AI tool perform validation.

Per ChatGPT...

Hallucinations in LLMs occur when models generate confident-sounding yet incorrect information. Understanding their nature, recognizing their causes, and applying proper techniques significantly reduces their impact, ensuring more accurate and trustworthy outcomes.

A little food for thought

I found this interesting Github repo that is reporting on hallucination rates in models. You can review this at https://github.com/vectara/hallucination-leaderboard. But I do think the nature of your prompt can lead a model to hallucinate when you exceed its inherent capabilities. It is unfortunate that the model would rather hallucinate than inform you of its taking creative liberties with your inquiry.

A last note

Perplexity is an amazing tool, and I have switched much of my Google-foo over to the platform. I also recommend it. If you haven't tried it, please do so! In no way was this note meant to disparage the platform. I continue to use it to this date, despite the learnings I am choosing to take from this story.

related posts